
Can we trust every piece of data at our disposal to be accurate? Every business, regardless of size, needs data. Without reliable data, however, organizations cannot decide what is best for their customers and internal operations. Data preprocessing is the first and most important stage in any machine learning workflow. With data preprocessing techniques in machine learning, businesses can obtain clean, correct data to train their algorithms on and work toward better services.

Data preprocessing is the process of converting raw data to address problems such as irrelevance, irregularity, or the lack of a proper statistical description, producing a dataset that can be analyzed in an accessible format. Data preprocessing techniques have been developed to help train artificial intelligence and machine learning models and to make predictions with them.

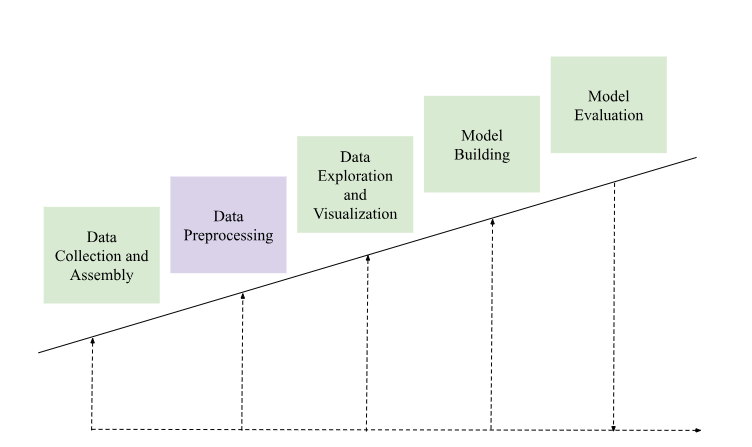

Data preprocessing changes the data into a form that can be analyzed in data mining, machine learning, and other data science operations with greater effectiveness and speed. The techniques are typically applied at the beginning of the machine learning and AI development lifecycle to ensure reliable findings.

Text, photos, video, and other types of raw, real-world data are unstructured. Such data can be inaccurate and inconsistent, is often missing essential information, and lacks a consistent, regular layout.

Machines prefer to work with neat and orderly data; they process data as 1s and 0s. Structured data such as whole numbers and percentages is therefore simple to compute with. Raw information in the form of text and images, however, needs to be filtered and prepared before processing.

Algorithms that learn from data are essentially statistical computations that depend on the information they are given. Hence the saying, “garbage in, garbage out.” Data projects can only be effective when high-quality data is fed into the computers.

If you skip the data preprocessing stage, your results will suffer later, when you use the dataset in a machine learning model. The majority of models, for example, cannot handle missing values.

Here are some of the reasons why different data preprocessing techniques are needed for the machine learning model:

Data is the most important component of machine learning models. Good-quality data produces the best-quality results for any organization. Data preprocessing techniques in machine learning improve data quality along several factors, such as the following:

Python is the most widely used language for the major data preprocessing techniques in machine learning. The data preprocessing techniques include:

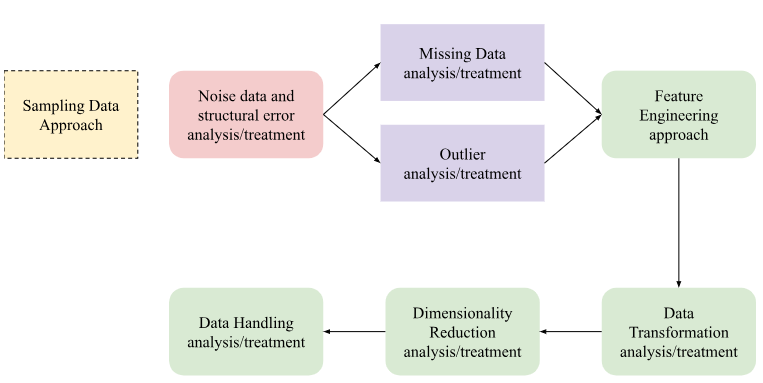

Finding and correcting flawed and erroneous information in your dataset is one of the most essential parts of data preprocessing for improving its overall quality. Data cleaning resolves the inconsistencies discovered during the data quality review. Data inaccuracies can arise from human error (for example, data recorded in the wrong field). Deduplication eliminates duplicate values so that repeated records do not bias the data. Depending on the type of data you work with, you might have to run it through several kinds of cleaners.
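As a rough illustration of two cleaning steps, deduplication and filling in missing values, here is a minimal plain-Python sketch. The `clean_records` helper, the field names, and the choice of median imputation are assumptions made for this example; in practice a library such as pandas is typically used for the same job.

```python
from statistics import median

def clean_records(records, numeric_field):
    """Drop exact duplicate rows, then fill missing numeric values with the median."""
    # Deduplicate while preserving the order of first occurrences.
    seen = set()
    unique = []
    for row in records:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(dict(row))  # copy so the input is not modified

    # Compute the median from the values that are actually present.
    present = [r[numeric_field] for r in unique if r[numeric_field] is not None]
    fill_value = median(present)

    # Impute missing entries (represented here as None) with that median.
    for r in unique:
        if r[numeric_field] is None:
            r[numeric_field] = fill_value
    return unique

rows = [
    {"id": 1, "age": 30},
    {"id": 2, "age": None},  # missing value
    {"id": 1, "age": 30},    # exact duplicate of the first row
    {"id": 3, "age": 50},
]
cleaned = clean_records(rows, "age")
```

Median imputation is only one option; whether to impute, drop, or flag missing values depends on the dataset.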

A real-world dataset usually contains a large number of variables; if we do not reduce this number, it can hurt how well the model performs when we eventually feed it this dataset. Reducing the number of features while retaining as much of the variation in the dataset as possible is likely to have a favorable effect in several respects, such as training time and the risk of overfitting.

You can apply different dimensionality reduction methods to improve your data for future use.
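One such method is principal component analysis (PCA). The following is a minimal sketch using NumPy's singular value decomposition; the `pca_reduce` function name and the toy matrix are assumptions for illustration, not a reference implementation.

```python
import numpy as np

def pca_reduce(X, n_components):
    """Project X (n_samples x n_features) onto its top principal components."""
    X = np.asarray(X, dtype=float)
    # Center each feature so the components describe variance around the mean.
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by singular value.
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    # Share of total variance captured by each component.
    explained_ratio = (S ** 2) / np.sum(S ** 2)
    return X_centered @ components.T, explained_ratio[:n_components]

# Toy data: the second column is roughly twice the first, the third is nearly constant.
X = np.array([
    [2.0, 4.1, 1.0],
    [3.0, 6.0, 0.9],
    [4.0, 7.9, 1.1],
    [5.0, 10.2, 1.0],
])
reduced, ratio = pca_reduce(X, n_components=1)
```

Here three features collapse into one column while most of the variance is kept, because the first two features are strongly correlated.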

Feature engineering is used to develop better features for the dataset that will boost the performance of the model. These features are generated manually from the existing features by applying a few transformations to them, and we mostly depend on domain-specific knowledge to create them. Where the initial features are complicated and high-dimensional, new features can also be extracted with techniques like PCA, linear discriminant analysis (LDA), and non-negative matrix factorization (NMF).
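To make manual feature generation concrete, here is a small sketch that derives new features from existing ones. The field names (`weight_kg`, `height_m`, `signup_date`) and the `add_features` helper are hypothetical, chosen only for this example.

```python
from datetime import date

def add_features(row):
    """Return a copy of row with derived features added (hypothetical fields)."""
    out = dict(row)
    # Ratio feature: combine two raw measurements into one informative value.
    out["bmi"] = row["weight_kg"] / (row["height_m"] ** 2)
    # Date decomposition: expose the weekday hidden inside a raw date.
    out["signup_weekday"] = row["signup_date"].weekday()  # Monday is 0
    out["signup_is_weekend"] = out["signup_weekday"] >= 5
    return out

row = {"weight_kg": 72.0, "height_m": 1.80, "signup_date": date(2024, 3, 9)}
features = add_features(row)
```

Which derived features are useful is a domain question; the ratio and the weekday flag above are just two common patterns.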

Data transformation is the process of converting data from one format or scale to another. Certain algorithms require that the input data be transformed; forgetting to do this can result in poor model accuracy or even bias. Standardization, normalization, and discretization are standard data transformation processes. Standardization rescales data to have zero mean and unit variance, while normalization rescales data to a common range such as 0 to 1. Discretization converts continuous data into discrete bins.
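The three transformations can be sketched in a few lines of standard-library Python. The function names, the sample ages, and the bin edges are assumptions for illustration; the sketch also assumes the input values are not all identical, since that would cause a division by zero.

```python
from bisect import bisect_right
from statistics import mean, pstdev

def standardize(values):
    """Rescale to zero mean and unit variance: z = (x - mean) / std."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

def min_max_normalize(values):
    """Rescale to the range [0, 1]: x' = (x - min) / (max - min)."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def discretize(values, edges, labels):
    """Map each value to a labeled bin; needs len(labels) == len(edges) + 1."""
    return [labels[bisect_right(edges, v)] for v in values]

ages = [18, 25, 40, 67]
z_scores = standardize(ages)
scaled = min_max_normalize(ages)
bins = discretize(ages, edges=[30, 60], labels=["young", "middle", "senior"])
```

In a real pipeline, the mean, standard deviation, minimum, and maximum are usually computed on the training data only and then reused for new data.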

When working with real-world classification data, one of the most common challenges is that the classes are imbalanced, which strongly biases the model toward the majority class. The major techniques used to handle imbalanced data are:
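As one hedged illustration of handling class imbalance, random oversampling duplicates minority-class samples until every class is as frequent as the largest one. The `random_oversample` helper and the toy data are assumptions for this sketch, and oversampling is only one of several possible approaches.

```python
import random
from collections import Counter

def random_oversample(X, y, seed=0):
    """Duplicate randomly chosen minority samples until all classes match the largest."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    counts = Counter(y)
    target = max(counts.values())
    X_out, y_out = list(X), list(y)
    for label, count in counts.items():
        # Candidate samples of this class to draw duplicates from.
        pool = [x for x, lbl in zip(X, y) if lbl == label]
        for _ in range(target - count):
            X_out.append(rng.choice(pool))
            y_out.append(label)
    return X_out, y_out

X = [[0.1], [0.2], [0.3], [0.4], [0.9]]
y = [0, 0, 0, 0, 1]  # class 1 is the minority
X_bal, y_bal = random_oversample(X, y)
```

Resampling like this is normally applied to the training split only, so that duplicated samples do not leak into evaluation data.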

It is no overstatement to say, “Data is everything.” Every organization or business needs data to improve its products and services, and data cleaning is an important step in every such effort. Machine learning models drive major operations, and data preprocessing techniques in machine learning help prepare the right data for those models to work well. Interview Kickstart has always been at the forefront of helping aspiring data scientists learn and explore machine learning in depth and get into their desired company. Join our machine learning program to excel in data preprocessing and other machine learning techniques.

The major steps in data preprocessing are data cleaning, data integration, data reduction and data transformation.

There are two types of data preprocessing: data cleaning and feature engineering.

Data scientists use exploratory data analysis (EDA), which often includes the use of data visualization techniques, to explore and analyze data sets and highlight their key properties.

The basic NLP preprocessing includes sentence segmentation, lowercasing, word tokenization, stemming or lemmatization, stop word removal, and spelling correction.
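A few of these steps (sentence segmentation, lowercasing, word tokenization, and stop word removal) can be sketched with regular expressions alone. The `preprocess` function and the tiny stop word set are assumptions for illustration; stemming, lemmatization, and spelling correction are left out because they usually rely on a dedicated NLP library.

```python
import re

# Tiny illustrative stop word list; real lists are much longer.
STOP_WORDS = {"the", "is", "a", "an", "and", "of", "to", "in"}

def preprocess(text):
    """Return one list of cleaned tokens per sentence."""
    # Sentence segmentation: split after ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    result = []
    for sentence in sentences:
        # Lowercase, then tokenize into runs of letters, digits, and apostrophes.
        tokens = re.findall(r"[a-z0-9']+", sentence.lower())
        # Stop word removal.
        result.append([t for t in tokens if t not in STOP_WORDS])
    return result

tokens = preprocess("The model is trained. Cleaning the data improves accuracy!")
```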

Pre-processing scripts in machine learning are executed before the value and validity rules are verified, while post-processing scripts are executed after these checks.
